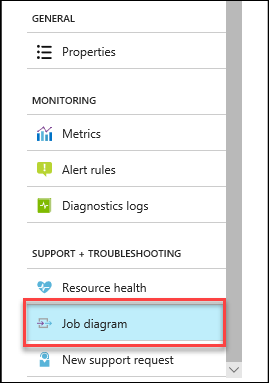

Azure SQL Analytics provides two views:

- A view for monitoring databases and elastic pools
- A view for monitoring managed instances and managed instance databases

The tiles in Azure SQL Analytics are populated only if the diagnostic logs for your Azure SQL databases are configured to be sent to a Log Analytics workspace. Once they are, you can use Azure SQL Analytics to monitor SQL errors, timeouts, blocks, database waits, query duration, CPU usage, data I/O usage, log I/O usage, and query wait statistics.
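Because each tile depends on a specific kind of diagnostic data, it can help to think of the setup as a mapping from monitored area to diagnostic setting category. The sketch below is a hypothetical helper, not part of Azure SQL Analytics or any Azure SDK. It assumes the category names shown (`Errors`, `Timeouts`, `Blocks`, `DatabaseWaitStatistics`, `QueryStoreRuntimeStatistics`, `QueryStoreWaitStatistics`, and the `Basic` metric category), so check them against the current Azure documentation before relying on them. Given the categories you have enabled, it reports which areas would have data and which categories are still missing.

```python
# Hypothetical mapping (an assumption, verify against the Azure docs):
# monitored area -> diagnostic setting category that must be streamed to
# Log Analytics for that area's tile to show data. "Basic" is a metric
# category; the others are log categories.
REQUIRED_CATEGORY = {
    "SQL errors": "Errors",
    "Timeouts": "Timeouts",
    "Blocks": "Blocks",
    "Database waits": "DatabaseWaitStatistics",
    "Query duration": "QueryStoreRuntimeStatistics",
    "Query waits": "QueryStoreWaitStatistics",
    "CPU usage": "Basic",
    "Data IO usage": "Basic",
    "Log IO usage": "Basic",
}


def populated_areas(enabled_categories):
    """Return the monitored areas that would have data, in mapping order."""
    enabled = set(enabled_categories)
    return [area for area, category in REQUIRED_CATEGORY.items() if category in enabled]


def missing_categories(enabled_categories):
    """Return the categories that still need enabling, sorted for stable output."""
    enabled = set(enabled_categories)
    return sorted(set(REQUIRED_CATEGORY.values()) - enabled)


if __name__ == "__main__":
    # Example: only errors, timeouts and basic metrics are being sent.
    enabled = ["Errors", "Timeouts", "Basic"]
    print(populated_areas(enabled))
    print(missing_categories(enabled))
```

A plain dictionary keeps the dependency explicit: if a tile stays empty, the missing category is the first thing to check in the database's diagnostic settings.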

In the next post, we will look at how to provision and configure Azure SQL Analytics.