I attended TechEd this year (2013), and it was full of interesting sessions. Picking mine was easy, as my main focus was on Cloud, Database and Architecture.

I missed the keynote session as I didn't reach Auckland until 8pm on Tuesday (10/09/2013). I caught up with my friend from HP for a hot chocolate and went to bed.

In the morning I caught up with Glassboy for breakfast, collected my registration pack, and was ready for Scott Guthrie's session on Cloud (Windows Azure, of course).

It was a full-on session that ran over two parts.

I have been playing with Windows Azure recently and I am impressed, especially after Scott mentioned that they push numerous feature releases to production every day.

Another important session I really enjoyed was "Largest SQL and Azure Projects in the World".

Glad to hear that SQL Server can handle terabytes and terabytes of data.

There were other good sessions too, such as "Benefits Dependency Network to bring business and IT changes together", "Panel discussion failure of DW projects" and "Performance Tuning Analysis Services Cube".

The food was OK, but the catering could be better for vegetarians and vegans.

The HUB was very crowded, and I wasn't really keen on the stands this time.

Met my Swedish friend at the stands.

TechEd next year? I'm keen, but I need to see how the rest of the year goes.

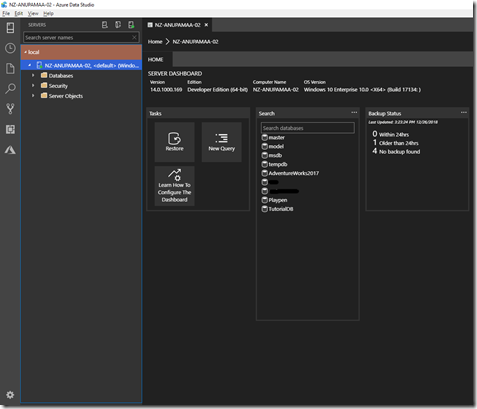

SQL Server 2019 is now generally available; the announcement came on Day 1 of Microsoft Ignite 2019. SQL Server 2019 is a unified platform for enterprises to meet their business needs.